Authors

Jacinto C. Nascimento, Gustavo Carneiro

Introduction

The HEARTRACK project has given rise to several publications, which cover topics including the following. Segmentation and Tracking of the Human Heart in 2D and 3D Ultrasound Data based on a Principled Combination of the Top-down and Bottom-up Paradigms. Self-retraining and Co-training to Reduce the Training Set Size in Deep Learning Methods.

Description

Segmentation and Tracking of the Human Heart in 2D and 3D Ultrasound Data based on a Principled Combination of the Top-down and Bottom-up Paradigms. The project presents a novel methodology for nonrigid object segmentation that combines a deep learning architecture with manifold learning. The main contribution of the article is the dimensionality reduction of the rigid components of the segmentation contour parametrization. A manifold learning based approach reduces the dimension of the rigid space, which in turn gives a faster running time in both the training and segmentation stages.

Combining Multiple Dynamic Models and Deep Learning Architectures for Tracking the Left Ventricle Endocardium in Ultrasound Data. A new statistical pattern recognition approach for tracking the left ventricle endocardium in ultrasound data is presented. The problem is formulated as a sequential importance resampling algorithm such that the expected segmentation of the current time step is estimated based on the appearance, shape, and motion models that take into account all previous and current images and previous segmentation contours produced by the method. Using a training set comprising diseased and healthy cases, we show that our approach produces more accurate results than current state-of-the-art endocardium tracking methods in two test sequences from healthy subjects. Using three test sequences containing different types of cardiopathies, we show that our method correlates well with inter-user statistics produced by four cardiologists. See more in TPAMI 2013 Paper and TIP 2012 Paper.
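The sequential importance resampling (SIR) formulation mentioned above can be illustrated with a minimal sketch. This is not the authors' implementation: the state here is a single scalar (a stand-in for one contour parameter), the motion model is an assumed random walk, and the observation model is an assumed Gaussian likelihood. All function names and parameter values are hypothetical.

```python
import math
import random


def gaussian_likelihood(observation, predicted, std):
    """Unnormalized Gaussian likelihood of an observation given a prediction."""
    return math.exp(-0.5 * ((observation - predicted) / std) ** 2)


def sir_step(particles, observation, rng, motion_std=0.5, obs_std=1.0):
    """One SIR step for a scalar state (assumed toy models, see lead-in).

    Returns the resampled particles and the weighted-mean state estimate.
    """
    # 1. Predict: propagate every particle through a random-walk motion model.
    predicted = [p + rng.gauss(0.0, motion_std) for p in particles]

    # 2. Weight: score each particle against the current observation.
    weights = [gaussian_likelihood(observation, p, obs_std) for p in predicted]
    total = sum(weights)
    if total == 0.0:
        # All likelihoods underflowed; fall back to uniform weights.
        weights = [1.0 / len(predicted)] * len(predicted)
    else:
        weights = [w / total for w in weights]

    # 3. Estimate: expected state under the weighted particle set.
    estimate = sum(w * p for w, p in zip(weights, predicted))

    # 4. Resample: draw particles in proportion to their weights.
    resampled = rng.choices(predicted, weights=weights, k=len(predicted))
    return resampled, estimate


def track(observations, n_particles=500, seed=0):
    """Run the SIR filter over a sequence of scalar observations."""
    rng = random.Random(seed)
    particles = [rng.uniform(-10.0, 10.0) for _ in range(n_particles)]
    estimates = []
    for z in observations:
        particles, estimate = sir_step(particles, z, rng)
        estimates.append(estimate)
    return estimates


# Hypothetical usage: a constant signal observed for 20 time steps.
estimates = track([5.0] * 20)
```

In the approach described above, the state would be a full segmentation contour and the weights would come from learned appearance, shape, and motion models; the predict, weight, estimate, resample loop is the only part this sketch is meant to convey.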

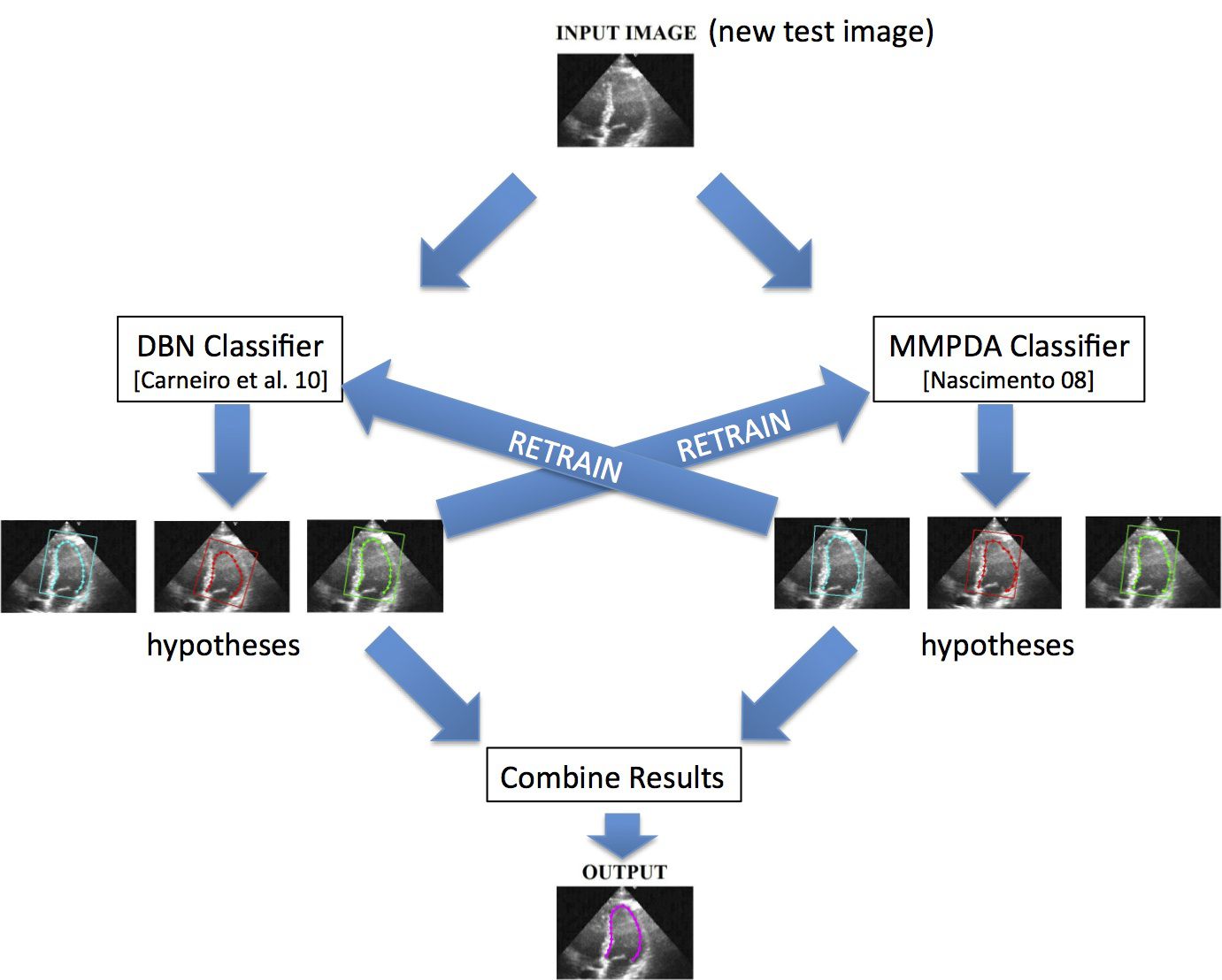

Self-retraining and Co-training to Reduce the Training Set Size in Deep Learning Methods. The use of statistical pattern recognition models to segment the left ventricle of the heart in ultrasound images has gained substantial attention over the last few years. The main obstacle for the wider exploration of this methodology lies in the need for large annotated training sets, which are used for the estimation of the statistical model parameters. In this paper, we present a new on-line co-training methodology that reduces the need for large training sets for such parameter estimation. Our approach learns the initial parameters of two different models using a small manually annotated training set. Then, given each frame of a test sequence, the methodology not only produces the segmentation of the current frame, but it also uses the results of both classifiers to re-train each other incrementally. This online aspect of our approach has the advantages of producing segmentation results and re-training the classifiers on the fly as frames of a test sequence are presented, but it introduces a harder learning setting compared to the usual off-line co-training, where the algorithm has access to the whole set of un-annotated training samples from the beginning. Moreover, we introduce the use of the following new types of classifiers in the co-training framework: deep belief network and multiple model probabilistic data association. We show that our method leads to a fully automatic left ventricle segmentation system that achieves state-of-the-art accuracy on a public database with training sets containing at least twenty annotated images. See more in ICCV 2011 Paper and CVPR 2012 Paper.
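The on-line co-training loop described above can be sketched as follows. This is a toy illustration under stated assumptions, not the published method: the deep belief network and the multiple model probabilistic data association classifiers are replaced by two hypothetical nearest-mean classifiers, each seeing a different scalar "view" of a frame, and the confidence threshold is an arbitrary choice.

```python
class NearestMeanClassifier:
    """Toy two-class classifier on one scalar feature (stand-in for a real model)."""

    def __init__(self):
        self.sums = {0: 0.0, 1: 0.0}
        self.counts = {0: 0, 1: 0}

    def update(self, x, label):
        """Incrementally add one labeled sample."""
        self.sums[label] += x
        self.counts[label] += 1

    def mean(self, label):
        # Assumes the class has at least one sample (guaranteed by seeding).
        return self.sums[label] / self.counts[label]

    def predict(self, x):
        """Return (label, confidence) with confidence in [0, 1]."""
        d0 = abs(x - self.mean(0))
        d1 = abs(x - self.mean(1))
        label = 0 if d0 <= d1 else 1
        total = d0 + d1
        confidence = 1.0 if total == 0.0 else abs(d0 - d1) / total
        return label, confidence


def online_cotrain(clf_a, clf_b, frames, threshold=0.6):
    """Process frames one at a time; a confident prediction from one
    classifier becomes a training label for the other."""
    outputs = []
    for view_a, view_b in frames:
        label_a, conf_a = clf_a.predict(view_a)
        label_b, conf_b = clf_b.predict(view_b)
        # The result for this frame comes from the more confident classifier.
        outputs.append(label_a if conf_a >= conf_b else label_b)
        # Cross re-training, performed incrementally as each frame arrives.
        if conf_a >= threshold:
            clf_b.update(view_b, label_a)
        if conf_b >= threshold:
            clf_a.update(view_a, label_b)
    return outputs


# Seed both models with a small "manually annotated" set (hypothetical values).
clf_a, clf_b = NearestMeanClassifier(), NearestMeanClassifier()
for x, label in [(0.0, 0), (10.0, 1)]:
    clf_a.update(x, label)
for x, label in [(0.0, 0), (100.0, 1)]:
    clf_b.update(x, label)

# Each frame of the test sequence provides one feature per view.
outputs = online_cotrain(clf_a, clf_b, [(1.0, 8.0), (9.0, 95.0), (0.5, 3.0)])
```

Because frames are consumed in order and the models are updated immediately, the classifiers never see the whole unannotated set in advance, which is the harder setting the paragraph above contrasts with off-line co-training.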

Non-rigid Segmentation using Sparse Low dimensional Manifolds and Deep Belief Networks. The project proposes a new combination of deep belief networks and sparse manifold learning strategies for the 2D segmentation of non-rigid visual objects. With this novel combination, we aim to reduce the training and inference complexities while maintaining the accuracy of machine learning based non-rigid segmentation methodologies. Typical non-rigid object segmentation methodologies divide the problem into a rigid detection followed by a non-rigid segmentation, where the low dimensionality of the rigid detection allows for a robust training (i.e., a training that does not require a vast amount of annotated images to estimate robust appearance and shape models) and a fast search process during inference. Therefore, it is desirable that the dimensionality of this rigid transformation space be as small as possible in order to enhance the advantages brought by the aforementioned division of the problem. In this paper, we propose the use of sparse manifolds to reduce the dimensionality of the rigid detection space. Furthermore, we propose the use of deep belief networks to allow for a training process that can produce robust appearance models without the need for large annotated training sets. We test our approach on the segmentation of the left ventricle of the heart from ultrasound images and of lips from frontal face images. Our experiments show that the use of sparse manifolds and deep belief networks for the rigid detection stage leads to segmentation results that are as accurate as the current state of the art, but with lower search complexity and training processes that require only a small amount of annotated training data. See more in CVPR 2013 Paper, CVPR 2014 Paper and TIP 2017 Paper.
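To see why a smaller rigid detection space lowers search complexity, consider a back-of-the-envelope sketch. It assumes an exhaustive grid search, which is a simplification chosen for illustration and not necessarily the search strategy used in the papers. The dimensions (5 and 2) and the number of samples per dimension (10) are hypothetical values.

```python
def grid_search_evaluations(samples_per_dim, n_dims):
    """Number of candidates visited by an exhaustive grid search."""
    return samples_per_dim ** n_dims


# Hypothetical rigid space: e.g. translation (2), rotation (1), scale (2).
full = grid_search_evaluations(10, 5)      # 100000 candidates

# Hypothetical low-dimensional manifold learned from the rigid parameters.
reduced = grid_search_evaluations(10, 2)   # 100 candidates

speedup = full // reduced                  # 1000
```

The cost grows exponentially with the number of dimensions, so each dimension removed from the rigid space divides the number of appearance-model evaluations by the per-dimension sample count.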